This tutorial is part of a series on running stateful workloads on Kubernetes with Portworx. The previous parts covered the architecture and installation of Portworx. This guide explains how to use Portworx features such as storage pools, class of service, replicated volumes, and shared volumes to achieve optimal performance and high availability for the WordPress content management system (CMS).

WordPress is a stateful application that relies on two persistence backends: a file system and a MySQL database. The storage service for both backends should be reliable, secure, and performant. In scale-out mode, multiple WordPress instances access the same file system to read and update content such as images, videos, plug-ins, themes, and configuration files. The storage backend for MySQL must sustain high throughput during peak usage.

Portworx is an ideal cloud native storage platform for running WordPress on Kubernetes. It has built-in capabilities to handle the scale-out, shared, high-performance storage requirements of web-scale applications.

Whenever Portworx detects a new disk attached to a node in the cluster, it automatically benchmarks the device to assess its I/O performance. Based on the throughput reported by the benchmark, it creates storage pools that aggregate devices with similar characteristics.
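To make the grouping idea concrete, here is a conceptual Python sketch. Portworx's real benchmark and pool-assignment logic is internal and is not reproduced here; the device names, throughput figures, and the 500 MB/s cut-off are hypothetical values chosen only to illustrate splitting each node's devices into a low and a high IO priority pool.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical benchmark results in MB/s, keyed by (node, device).
BENCHMARKS: Dict[Tuple[str, str], float] = {
    ("node1", "/dev/sda"): 110.0,       # external USB disk
    ("node1", "/dev/nvme0n1"): 1800.0,  # NVMe device
    ("node2", "/dev/sda"): 105.0,
    ("node2", "/dev/nvme0n1"): 1750.0,
}

# Assumed cut-off between "low" and "high" IO priority (not a Portworx value).
HIGH_IO_THRESHOLD_MBPS = 500.0


def classify(throughput_mbps: float) -> str:
    """Map a benchmarked throughput to an IO priority class."""
    return "high" if throughput_mbps >= HIGH_IO_THRESHOLD_MBPS else "low"


def build_pools(benchmarks: Dict[Tuple[str, str], float]) -> Dict[Tuple[str, str], List[str]]:
    """Group each node's devices into pools keyed by (node, IO priority)."""
    pools: Dict[Tuple[str, str], List[str]] = defaultdict(list)
    for (node, device), mbps in sorted(benchmarks.items()):
        pools[(node, classify(mbps))].append(device)
    return dict(pools)


if __name__ == "__main__":
    for (node, priority), devices in sorted(build_pools(BENCHMARKS).items()):
        print(f"{node} {priority}: {devices}")
```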

In my bare-metal cluster, each node has an external USB disk and an NVMe device attached. Based on the internal Portworx benchmark results, these two devices are placed in separate pools. During installation, Portworx created two storage pools classified as low and high based on IO priority.

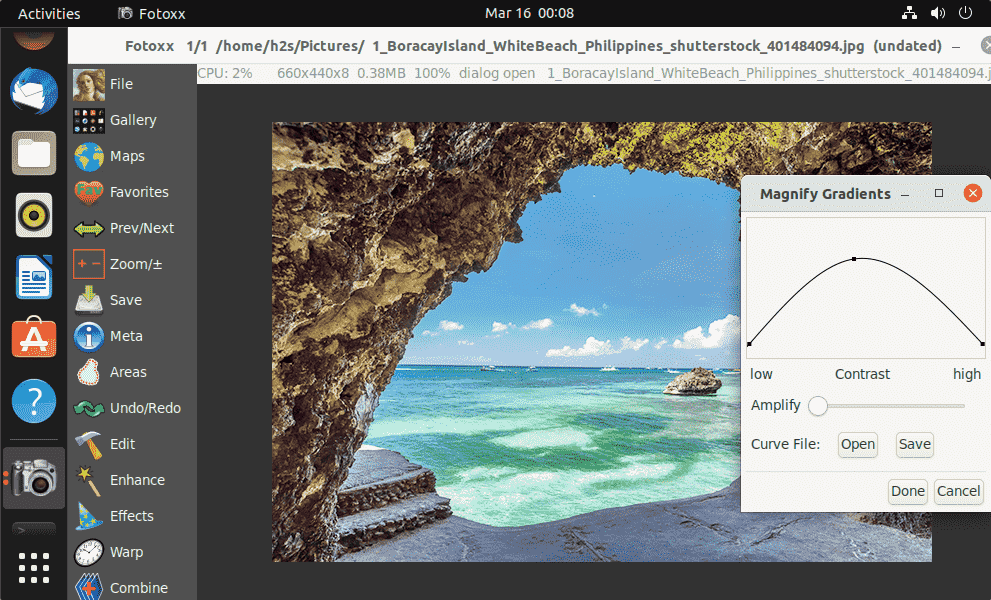

When we run the pxctl status command, the output shows the available storage pools.

The devices with lower IOPS are part of pool 0, while the NVMe devices are part of pool 1.

Running the pxctl service pool show command gives additional details about the storage pools.

We can target these pools to create storage volumes aligned with workload characteristics. For WordPress, we will place the shared file system on pool 0 and the MySQL data and log files on pool 1.

Let’s see how to utilize these storage pools from Kubernetes.

Storage classes act as the interface between the Portworx storage engine and workloads running in Kubernetes. The parameters specified in a storage class determine how persistent volumes are created for the claims that reference it. This approach takes advantage of dynamic provisioning in Kubernetes: when a PVC references a storage class through an annotation, Portworx dynamically creates a PV and binds the PVC to it.

For MySQL, we need a volume that’s replicated across three nodes with support for high IOPS. Let’s create a storage class with these parameters.
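A minimal sketch of such a storage class, written in Python so it can emit the manifest as JSON (kubectl apply -f accepts JSON as well as YAML). The class name px-mysql-sc is a hypothetical choice; the provisioner shown is the in-tree Portworx one, and CSI-based installs would use pxd.portworx.com instead.

```python
import json


def mysql_storage_class(name: str = "px-mysql-sc") -> dict:
    """StorageClass for MySQL: three replicas, db IO profile, high-priority pool."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},  # hypothetical name
        # In-tree Portworx provisioner; CSI installs use "pxd.portworx.com".
        "provisioner": "kubernetes.io/portworx-volume",
        # StorageClass parameters are always string-valued.
        "parameters": {
            "repl": "3",            # keep three copies of every block
            "io_profile": "db",     # coalesce syncs for database workloads
            "priority_io": "high",  # place the volume on the high-IO (NVMe) pool
        },
    }


if __name__ == "__main__":
    # Usage: python mysql_sc.py | kubectl apply -f -
    print(json.dumps(mysql_storage_class(), indent=2))
```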

The parameter io_profile: “db” implements a write-back flush coalescing algorithm that attempts to coalesce multiple syncs occurring within a 50ms window into a single sync. The parameter priority_io: “high” indicates that the PV must be created on a storage pool with high IOPS.
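The effect of coalescing can be illustrated with a simplified model. This Python sketch is not Portworx's implementation; it assumes a fixed 50ms window that opens at the first pending sync, and counts how many physical flushes a burst of sync requests would turn into.

```python
from typing import List

WINDOW_MS = 50.0  # coalescing window described for io_profile "db"


def coalesce_syncs(request_times_ms: List[float], window_ms: float = WINDOW_MS) -> List[List[float]]:
    """Group sync requests so that each group becomes a single flush.

    Simplified model: a group opens at the first pending request, and
    every request arriving less than window_ms after that opening joins it.
    """
    flushes: List[List[float]] = []
    for t in sorted(request_times_ms):
        if flushes and t - flushes[-1][0] < window_ms:
            flushes[-1].append(t)   # absorbed into the open flush
        else:
            flushes.append([t])     # window expired: start a new flush
    return flushes


if __name__ == "__main__":
    syncs = [0, 5, 12, 49, 60, 61, 140]
    groups = coalesce_syncs(syncs)
    print(f"{len(syncs)} syncs -> {len(groups)} flushes: {groups}")
```

In this model, seven sync requests collapse into three flushes, which is the kind of saving that reduces write amplification for a database issuing frequent fsync calls.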

The storage class for WordPress has different requirements. It needs not only replication but also a shared volume that multiple pods can read and write. Since I/O is less critical, the volume can be placed on a storage pool with relatively lower throughput.

The io_profile: “cms” parameter applies to shared volumes and enables asynchronous write operations. This makes the WordPress dashboard more responsive when uploading files to the shared storage volume.
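A matching sketch for the WordPress storage class, again emitted as JSON from Python. The name px-wp-sc is hypothetical, and the replication factor of three is an assumption that mirrors the MySQL class, since the text only says the volume needs replication.

```python
import json


def wordpress_storage_class(name: str = "px-wp-sc") -> dict:
    """StorageClass for the shared WordPress file system."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},  # hypothetical name
        "provisioner": "kubernetes.io/portworx-volume",
        "parameters": {
            "repl": "3",           # assumed replication factor, same as MySQL
            "priority_io": "low",  # the lower-throughput pool is good enough
            "io_profile": "cms",   # profile for content-management workloads
            "shared": "true",      # one volume mounted read/write by many pods
        },
    }


if __name__ == "__main__":
    # Usage: python wp_sc.py | kubectl apply -f -
    print(json.dumps(wordpress_storage_class(), indent=2))
```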

Create the storage classes and proceed to the next step.

The first step in deploying MySQL is creating a PVC that makes use of dynamic provisioning.

Let’s create a dedicated namespace for the deployment.

The annotation in the PVC tells Kubernetes which pre-defined storage class to use when dynamically provisioning the PV.
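Here is a sketch of the namespace and the MySQL claim, bundled into a single Kubernetes List object. The names wordpress, px-mysql-pvc, and px-mysql-sc and the 2Gi size are hypothetical. The volume.beta.kubernetes.io/storage-class annotation is the legacy way to select a storage class; newer manifests set spec.storageClassName instead.

```python
import json

NAMESPACE = "wordpress"  # hypothetical namespace name


def namespace(name: str = NAMESPACE) -> dict:
    """Dedicated namespace for the WordPress stack."""
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}


def mysql_pvc(storage_class: str = "px-mysql-sc", size: str = "2Gi") -> dict:
    """Claim that triggers dynamic provisioning through the MySQL storage class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {
            "name": "px-mysql-pvc",
            "namespace": NAMESPACE,
            # Legacy annotation pointing the claim at a StorageClass.
            "annotations": {"volume.beta.kubernetes.io/storage-class": storage_class},
        },
        "spec": {
            "accessModes": ["ReadWriteOnce"],  # mounted by a single MySQL pod
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    manifest = {"apiVersion": "v1", "kind": "List", "items": [namespace(), mysql_pvc()]}
    print(json.dumps(manifest, indent=2))
```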

We will now create the MySQL deployment with one replica. Note that we don’t need a StatefulSet because replication is handled by the storage layer. Since the replication factor is set to three, every block written to the volume is automatically copied to two other nodes.

Even if the MySQL pod is terminated and rescheduled on a different node, the data remains accessible. Scheduling is handled by Portworx’s scheduler extension, STORK (Storage Operator Runtime for Kubernetes), which places the pod on a node that holds a replica of its volume.

In the deployment spec, we specify STORK as the scheduler to delegate the placement of the stateful pod to Portworx instead of leaving it to the default Kubernetes scheduler.
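A sketch of that deployment as a Python-generated manifest. schedulerName: stork is the setting described above; the image tag mysql:5.7, the placeholder password, the wordpress database name, and the claim name px-mysql-pvc are assumptions for illustration. MYSQL_ROOT_PASSWORD and MYSQL_DATABASE are environment variables of the official MySQL image.

```python
import json

NAMESPACE = "wordpress"    # hypothetical namespace
PVC_NAME = "px-mysql-pvc"  # hypothetical claim name


def mysql_deployment() -> dict:
    """Single-replica MySQL deployment scheduled by STORK."""
    labels = {"app": "mysql"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "mysql", "namespace": NAMESPACE},
        "spec": {
            "replicas": 1,  # data replication is done by Portworx, not extra pods
            # Recreate stops the old pod before starting a new one, so the
            # ReadWriteOnce volume is never claimed by two MySQL pods at once.
            "strategy": {"type": "Recreate"},
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "schedulerName": "stork",  # delegate placement to STORK
                    "containers": [{
                        "name": "mysql",
                        "image": "mysql:5.7",
                        "env": [
                            # Placeholder; use a Secret in a real deployment.
                            {"name": "MYSQL_ROOT_PASSWORD", "value": "change-me"},
                            # Creates the database WordPress will use.
                            {"name": "MYSQL_DATABASE", "value": "wordpress"},
                        ],
                        "ports": [{"containerPort": 3306}],
                        "volumeMounts": [{"name": "mysql-data", "mountPath": "/var/lib/mysql"}],
                    }],
                    "volumes": [{
                        "name": "mysql-data",
                        "persistentVolumeClaim": {"claimName": PVC_NAME},
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(mysql_deployment(), indent=2))
```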

Let’s expose the MySQL pods through a ClusterIP service.
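A sketch of the ClusterIP service. Its selector must match the pod label used in the deployment (assumed here to be app: mysql), and the service name mysql becomes the in-cluster DNS name WordPress connects to. Namespace and names are hypothetical.

```python
import json

NAMESPACE = "wordpress"  # hypothetical namespace


def mysql_service() -> dict:
    """ClusterIP service reachable only from inside the cluster."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "mysql", "namespace": NAMESPACE},
        "spec": {
            "type": "ClusterIP",
            "selector": {"app": "mysql"},  # must match the MySQL pod labels
            "ports": [{"port": 3306, "targetPort": 3306}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(mysql_service(), indent=2))
```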

SSH into one of the nodes to explore the volumes created by Portworx.
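One way to find and inspect the MySQL volume is sketched below with Python's subprocess module. It assumes kubectl and pxctl are both on the PATH of the node you SSH into, uses the hypothetical names from this walkthrough, and assumes that a dynamically provisioned Portworx volume carries the same name as its PV.

```python
import subprocess
from typing import List

# Hypothetical names used in this walkthrough; adjust to your cluster.
NAMESPACE = "wordpress"
MYSQL_PVC = "px-mysql-pvc"


def pv_name_cmd(pvc: str, namespace: str) -> List[str]:
    """kubectl command that prints the name of the PV bound to a claim."""
    return ["kubectl", "get", "pvc", pvc, "-n", namespace,
            "-o", "jsonpath={.spec.volumeName}"]


def inspect_cmd(volume: str) -> List[str]:
    """pxctl command that shows replication, IO priority, and pool placement."""
    return ["pxctl", "volume", "inspect", volume]


def run(cmd: List[str]) -> str:
    """Run a command and return its stdout, raising if it fails."""
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout.strip()


if __name__ == "__main__":
    volume = run(pv_name_cmd(MYSQL_PVC, NAMESPACE))
    print(run(["pxctl", "volume", "list"]))
    print(run(inspect_cmd(volume)))
```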

Notice that the volume complies with the settings in the storage class. The HA and replica sets sections of the output show replicas across three nodes. IO priority is set to high, which forces the volume onto the pool backed by the NVMe device on each node.

The volume for WordPress has different requirements than the MySQL volume. While it doesn’t demand the same throughput, it needs a shared file system. We specified these parameters in the storage class. Let’s go ahead and create the PVC.
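A sketch of the shared claim. ReadWriteMany is the Kubernetes access mode that allows several pods to mount the volume read/write, which is what the shared Portworx volume provides. The names px-wp-pvc and px-wp-sc and the 1Gi size are hypothetical.

```python
import json

NAMESPACE = "wordpress"  # hypothetical namespace


def wordpress_pvc(storage_class: str = "px-wp-sc", size: str = "1Gi") -> dict:
    """Claim for the shared WordPress file system."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {
            "name": "px-wp-pvc",
            "namespace": NAMESPACE,
            "annotations": {"volume.beta.kubernetes.io/storage-class": storage_class},
        },
        "spec": {
            # ReadWriteMany lets every WordPress pod mount the same volume.
            "accessModes": ["ReadWriteMany"],
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(wordpress_pvc(), indent=2))
```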

Let’s create the WordPress deployment and service objects.
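A sketch of both objects as one List manifest. The environment variable names belong to the official WordPress image; the values (service name mysql, user root, placeholder password, database wordpress) and the claim name px-wp-pvc are assumptions that match the other sketches, and the database is assumed to exist already. The NodePort service type exposes WordPress on a port of every node.

```python
import json

NAMESPACE = "wordpress"  # hypothetical namespace
PVC_NAME = "px-wp-pvc"   # hypothetical shared claim
LABELS = {"app": "wordpress"}


def wordpress_deployment(replicas: int = 1) -> dict:
    """WordPress pods scheduled by STORK, all mounting the shared volume."""
    env = {
        "WORDPRESS_DB_HOST": "mysql",          # ClusterIP service name for MySQL
        "WORDPRESS_DB_USER": "root",
        "WORDPRESS_DB_PASSWORD": "change-me",  # placeholder; use a Secret
        "WORDPRESS_DB_NAME": "wordpress",      # database must already exist
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "wordpress", "namespace": NAMESPACE},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": LABELS},
            "template": {
                "metadata": {"labels": LABELS},
                "spec": {
                    "schedulerName": "stork",
                    "containers": [{
                        "name": "wordpress",
                        "image": "wordpress",
                        "env": [{"name": k, "value": v} for k, v in env.items()],
                        "ports": [{"containerPort": 80}],
                        # Every replica mounts the same shared Portworx volume.
                        "volumeMounts": [{"name": "wp-content", "mountPath": "/var/www/html"}],
                    }],
                    "volumes": [{"name": "wp-content",
                                 "persistentVolumeClaim": {"claimName": PVC_NAME}}],
                },
            },
        },
    }


def wordpress_service() -> dict:
    """NodePort service; Kubernetes picks a port from the node-port range."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "wordpress", "namespace": NAMESPACE},
        "spec": {
            "type": "NodePort",
            "selector": LABELS,
            "ports": [{"port": 80, "targetPort": 80}],
        },
    }


if __name__ == "__main__":
    manifest = {"apiVersion": "v1", "kind": "List",
                "items": [wordpress_deployment(), wordpress_service()]}
    print(json.dumps(manifest, indent=2))
```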

Let’s now inspect the Portworx volume associated with the WordPress deployment.

This volume has both replication and shared flags turned on. It is assigned to the storage pool with low IO priority.

Let’s scale the WordPress deployment to run multiple pods.
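Scaling can be done with kubectl scale; the Python sketch below builds and runs that command, then lists the pods with their nodes so you can see the replicas spread across the cluster while sharing one volume. The namespace, deployment name, label, and replica count of three are hypothetical, and this sketch deliberately rejects scaling to zero.

```python
import subprocess
from typing import List

NAMESPACE = "wordpress"  # hypothetical namespace


def scale_cmd(deployment: str, replicas: int, namespace: str = NAMESPACE) -> List[str]:
    """Build a kubectl scale command; this sketch rejects counts below one."""
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    return ["kubectl", "scale", f"deployment/{deployment}",
            f"--replicas={replicas}", "-n", namespace]


def pods_cmd(namespace: str = NAMESPACE) -> List[str]:
    """List WordPress pods with the node each one runs on."""
    return ["kubectl", "get", "pods", "-n", namespace, "-l", "app=wordpress", "-o", "wide"]


if __name__ == "__main__":
    subprocess.run(scale_cmd("wordpress", 3), check=True)
    subprocess.run(pods_cmd(), check=True)
```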

You can access the CMS at any node’s IP address on the NodePort assigned to the WordPress service.

In the next part of this series, we will explore how to configure snapshots to back up and restore Portworx volumes. Stay tuned.

Portworx is a sponsor of The New Stack.

Feature image by Jessica Crawford from Pixabay.